[Work Log] Work Log - silhouettes, training likelihood, evaluating likelihood

Enabled glPolygonOffset and it improved results somewhat. Still getting stippling 10% of the time.

Adding edge-detection code to show stippled edges helps, but adds some internal edges for some reason.

Apparently, our point spacing is too close. This introduces lots of little ridges, which makes silhouette edges appear incorrectly on the interior of the object. We should pre-process curves in matlab to force spacing to be greater than or equal to the curve radius.
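A minimal sketch of the spacing constraint, written in Python rather than matlab purely for illustration. The function name `enforce_min_spacing` and the greedy drop-points strategy are assumptions, not the pre-processing we actually run; note that this version may drop the final point of the curve.

```python
import math

def enforce_min_spacing(points, min_dist):
    """Greedily drop points so consecutive kept points are >= min_dist apart.

    points: ordered list of (x, y, z) tuples along one curve.
    min_dist: minimum allowed spacing, e.g. the curve radius.
    """
    if not points:
        return []
    kept = [points[0]]  # always keep the first point
    for p in points[1:]:
        # Keep a point only if it is far enough from the last kept point.
        if math.dist(kept[-1], p) >= min_dist:
            kept.append(p)
    return kept

# Points every 0.25 units along the x-axis, thinned to a spacing of 1.0.
curve = [(0.25 * i, 0.0, 0.0) for i in range(9)]
thinned = enforce_min_spacing(curve, 1.0)
```

A resampling approach (interpolating new points at even arc length) would preserve the curve shape better than dropping points, at the cost of more code.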

Likelihood rendering bugs

Image dumps from our likelihood show all edges are being rendered (not just silhouette edges).

Seems to be an issue with Camera_render_wrapper. Possibly the y-axis flipping?

Yep. Reverses the handedness of the projected model.

Shader assumes orientation of forward-facing triangles isn't affected by projection. Thus, cross-product can be used to determine whether a face is forward-facing (a fundamental operation for classifying silhouette edges).
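To see why a y-axis flip breaks this assumption, here is a small Python sketch (not the shader code; the helper names are made up for illustration). The sign of the 2D cross product gives the winding of a projected triangle, and mirroring y negates it, so every front-facing triangle is classified as back-facing and vice versa.

```python
def signed_area_2d(a, b, c):
    """Twice the signed area of triangle abc; positive means counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def flip_y(p):
    """Mirror a projected point across the x-axis, as a y-flipping projection would."""
    return (p[0], -p[1])

tri = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]        # counter-clockwise winding
before = signed_area_2d(*tri)                      # positive: front-facing
after = signed_area_2d(*[flip_y(p) for p in tri])  # negative: winding reversed
```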

Can we solve by doing visibility test in world coordinates, which avoids the projection matrix altogether?

Yes, but there's a bug causing a few faulty silhouette edges to appear. Why?

It turns out we were assuming the normal vectors of opposite faces are parallel, and used this to . Apparently this is not always the case, especially at sharp corners (which occur routinely when point spacing is close).
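A rough Python sketch of a world-coordinate silhouette test that avoids the parallel-normals assumption by testing each adjacent face with its own normal. This is not the geometry shader itself; the function names, the counter-clockwise winding convention, and the example geometry are all assumptions made for illustration.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def faces_camera(tri, eye):
    """True if a triangle (counter-clockwise winding, world coords) faces the eye."""
    a, b, c = tri
    normal = cross(sub(b, a), sub(c, a))
    return dot(normal, sub(eye, a)) > 0

def is_silhouette_edge(tri_one, tri_two, eye):
    """A shared edge is a silhouette edge when exactly one adjacent face is front-facing."""
    return faces_camera(tri_one, eye) != faces_camera(tri_two, eye)

eye = (0.5, 0.0, 10.0)
A, B = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)           # the shared edge
front = (A, B, (0.5, 1.0, 0.0))                   # faces the eye
folded_back = (B, A, (0.5, 0.5, -1.0))            # folds away from the eye
coplanar = (B, A, (0.5, -1.0, 0.0))               # continues the front face
print(is_silhouette_edge(front, folded_back, eye))
print(is_silhouette_edge(front, coplanar, eye))
```

Because no projection matrix is involved, the result does not depend on whether the projection preserves handedness.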

During testing, we somehow corrupted the GPU's memory. System sprites (e.g. cursor) are starting to be corrupted, and program keeps crashing from GPU errors. Probably constructed too many shaders in a single session. Will reboot and continue.

Summary: found silhouette rendering bug caused by projection matrix that didn't preserve handedness. Rewrote silhouette pass 2 geometry shader to handle this case better. Now renders correctly in both cases.

Next: determine cause of likelihood NaN's; train the likelihood.

Blurred-difference (bd) likelihood issues

Getting "NaN" when evaluating. Checking the result of blurring the model shows inf's everywhere. Weird, because I can confirm that the input data is successfully blurring:

- Same blurrer is used for data and model

- It isn't the input (tried substituting data in for model and still got corrupt stuff back).

- Re-initializing blurrer doesn't seem to help

Stepping through in GDB...

After convolution, values are on the order of 1e+158... clearly invalid. Converting to float causes inf.
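For reference, a small Python sketch of how a value of this magnitude turns into inf and then NaN. It uses `ctypes.c_float` to mimic the C++ double-to-float cast; the helper name `to_float32` is made up, and this assumes the usual IEEE 754 float behaviour.

```python
import ctypes
import math

def to_float32(value):
    """Round-trip a Python float (a C double) through a 32-bit C float."""
    return ctypes.c_float(value).value

huge = 1e158                      # magnitude seen after the bad convolution
as_float = to_float32(huge)       # far beyond the float32 range (~3.4e38)
difference = as_float - as_float  # subtracting two infinities yields NaN
print(math.isinf(as_float), math.isnan(difference))
```

Any later subtraction of two such pixels (model minus data, say) would then poison the likelihood sum with NaN.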

Aside: the padding added before convolution isn't zeros! Fixing bug in lib/evaluate/convolve.h:fftw_convolution class.

Aside: Need to remove edges around image border:

Aside: different blurrer for each view? unnecessary!

Wrong, same blurrer, different internal buffers. Caused by calling blurrer.init_fftw_method() every time we add a view to the multi-view likelihood. Removed that call.

Apparently, calling blurrer_.init_fftw_method() is wreaking all kinds of havoc on the blurring results. I have no idea why. Possibly calling init_fftw_method() more than once is simply not supported. But that call simply destroys an object and creates a new one, so why is that different from the first time we called it?

Theory: fftw wisdom is messing with us?

Results: nope

We have a working case and a non-working case. Can we walk from one case to the other and find the exact change that causes the error?

Aside: Here's a bizarre result we're getting during failure:

Kind of cool!

Actually, there might be a hint here... Notice the elliptical shapes that are about the same size as the turntable, but shifted and screwed up.

It's possible that what we're seeing is convolution with a random mask. The two main ellipses would come from two random bright pixels in the mask, and the miscellaneous roughness might be a combination of small positive and negative values causing the texture we see. Next step: inspect the mask.

Bingo! We're working with a random mask.

Let's step back to the original failing case and see if the mask is still random.

Confirmed. Here's an example mask:

Here it is dimmer:

Anything look familiar? It's derived from our plant image (notice the ellipses from the turntable).

Theory: somehow the mask is getting overwritten with our blurred model.

Solved. Simple bug but was obscured by several issues.

The fundamental issue was that when the blurring mask was being padded, the padding wasn't being filled with zeros. Thus, the padded mask consisted of a small section of real mask, surrounded by huge amounts of uninitialized memory.
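A minimal Python sketch of the fix, for illustration only; the real code lives in the C++ fftw_convolution class, and the function name `pad_mask` and the top-left placement of the mask are assumptions here. The key point is that the padded buffer is explicitly zero-filled before the mask is copied in.

```python
def pad_mask(mask, height, width):
    """Embed a small 2D mask in the top-left corner of a zero-filled buffer."""
    if len(mask) > height or len(mask[0]) > width:
        raise ValueError("mask is larger than the padded size")
    # Explicit zero fill: never rely on freshly allocated memory being blank.
    padded = [[0.0] * width for _ in range(height)]
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            padded[r][c] = value
    return padded

mask = [[0.25, 0.5, 0.25]]
padded = pad_mask(mask, 2, 4)
# The padded mask should carry exactly the same total weight as the original.
total = sum(sum(row) for row in padded)
```

A cheap regression check along these lines (padded sum equals mask sum) would have caught the rollback immediately.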

This was a bug that I discovered and fixed 12 months ago, but it got rolled back when Andrew revamped the FFTW convolution class. Ultimately, I was able to restore my changes to the FFTW class without much trouble, but for the six hours that followed, these changes weren't being compiled, because they were in a header file, and the build system only rebuilds object files if the "cpp" file is changed.

Further, there was a red herring that appeared as "re-initializing the blurrer causes the errors". While this was true, it was only because re-initializing also re-initialized the blurring mask, and only during re-initialization did the uninitialized mask padding contain junk -- on the first initialization, the memory just happened to be blank. Go figure.

This was an absolutely essential bug to find and fix. Glad it's fixed, just wish it hadn't regressed in the first place.

Okay, time to reset. Let's confirm that the rendered models are blurring correctly. Then confirm non-zero log-likelihood result. Then on to training.

- Model blurring: CHECK

- Finite likelihood: CHECK (result: -3.38064e+08)

- Likelihood is near-optimal: FAIL. See below.

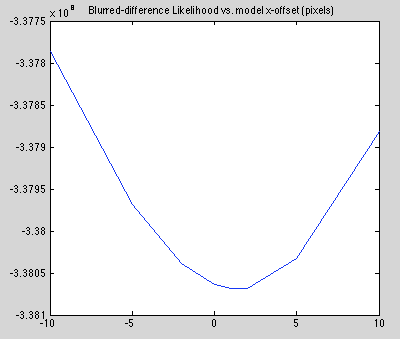

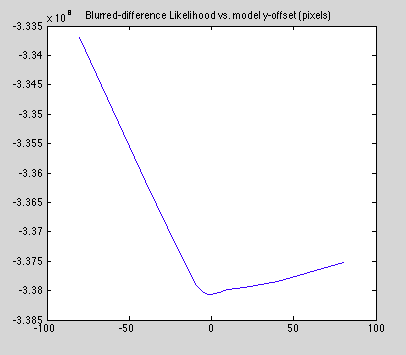

Optimality test

Added debugging options to shift the rendering left/right/up/down in the image: --dbg-ppo-x-offset and --dbg-ppo-y-offset. Ideally, the likelihood should be maximized at zero offset.

Log likelihood vs x-offset

0 -3.38064e+08

+1 -3.38069e+08

+2 -3.38068e+08

+5 -3.38033e+08

+10 -3.37881e+08

-2 -3.38039e+08

-5 -3.37968e+08

-10 -3.37786e+08

Weird... Why is it at a local minimum at the center?
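A quick Python sketch for checking a sweep like this automatically. The values are the x-offset results above; the helper names `best_offset` and `gain_over_zero` are made up for illustration.

```python
# Log likelihood for each x-offset, copied from the sweep above.
x_sweep = {
    0: -3.38064e+08,
    1: -3.38069e+08,
    2: -3.38068e+08,
    5: -3.38033e+08,
    10: -3.37881e+08,
    -2: -3.38039e+08,
    -5: -3.37968e+08,
    -10: -3.37786e+08,
}

def best_offset(sweep):
    """Offset with the highest log likelihood in the sweep."""
    return max(sweep, key=sweep.get)

def gain_over_zero(sweep):
    """How much better the best offset scores than zero offset (0 if zero wins)."""
    return sweep[best_offset(sweep)] - sweep[0]

print(best_offset(x_sweep), gain_over_zero(x_sweep))
```

For a well-behaved likelihood, `best_offset` should return 0; here the largest tested shift wins instead.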

Log likelihood vs y-offset

0 -3.38064e+08

-2 -3.38062e+08

-5 -3.38033e+08

-10 -3.3789e+08

-20 -3.37321e+08

-40 -3.36139e+08

-80 -3.33693e+08

2 -3.38052e+08

5 -3.38025e+08

10 -3.37983e+08

20 -3.37955e+08

40 -3.3785e+08

80 -3.37523e+08

Note that as y decreases, the top of the plant starts to fall off the screen. This suggests that having fewer model points might be over-preferred. Need to sanity-check the GMM model we're using -- I trained it months ago and never really leaned on it hard, so it's the first place to look for problems.

Also, might be a scaling issue. Double-check that blurred model and blurred data have similar scale.

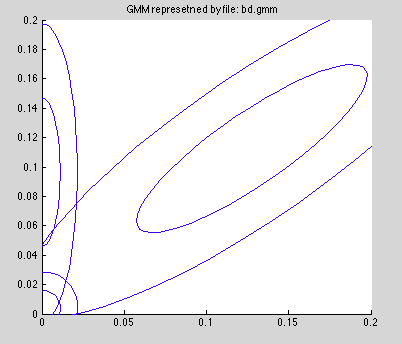

GMM sanity check

Plotting first and second standard deviation of the joint distribution of model-pixel-intensity and data-pixel-intensity.

This looks sensible. The first component is the diagonal one, representing true positives (in the presence of random perturbations).

The second component is at the origin -- true negatives.

The third component is along the y-axis, representing false positives in the data, aka noise.

The first component has roughly 10x less weight than the second and third.

No component for the false negatives (aka missing data). As I recall, running EM on a GMM with four or more components always resulted in redundant components. Possibly hand-initializing the components in each of the four positions might help.
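A sketch of what hand-initialization might look like, in Python for illustration. Every number below (means, covariances, weights) is a hypothetical starting guess, not a trained value; it assumes intensities in [0, 1] with model intensity on x and data intensity on y, so false negatives sit along the x-axis. EM would still have to refine these.

```python
import math

def gaussian_logpdf_2d(x, y, mean, cov):
    """Log density of a 2D Gaussian with full covariance ((a, b), (b, d))."""
    (a, b), (_, d) = cov
    det = a * d - b * b
    dx, dy = x - mean[0], y - mean[1]
    # Quadratic form via the closed-form inverse of a 2x2 matrix.
    quad = (d * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return -0.5 * quad - math.log(2.0 * math.pi) - 0.5 * math.log(det)

def component_terms(x, y, components):
    """log(weight) + log density for each (weight, mean, cov) component."""
    return [math.log(w) + gaussian_logpdf_2d(x, y, m, c) for w, m, c in components]

def gmm_logpdf(x, y, components):
    """Mixture log density, using log-sum-exp for numerical stability."""
    terms = component_terms(x, y, components)
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))

def best_component(x, y, components):
    """Index of the component that best explains the point (x, y)."""
    terms = component_terms(x, y, components)
    return terms.index(max(terms))

# Hypothetical initial guesses: (weight, mean, covariance).
init = [
    (0.04, (0.5, 0.5), ((0.08, 0.07), (0.07, 0.08))),    # true positives (diagonal)
    (0.45, (0.0, 0.0), ((0.001, 0.0), (0.0, 0.001))),    # true negatives (origin)
    (0.45, (0.0, 0.5), ((0.001, 0.0), (0.0, 0.08))),     # false positives (y-axis)
    (0.06, (0.5, 0.0), ((0.08, 0.0), (0.0, 0.001))),     # false negatives (x-axis)
]
print(best_component(0.5, 0.0, init))  # bright model pixel, dark data pixel
```

With a dedicated fourth component, a bright model pixel over dark data is explained by the false-negative component rather than being forced into the tail of one of the other three.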

I also recall some sort of GPU limitation that prevented me from evaluating more than three components in hardware. That seems unusual though, and I may be misremembering.

Does this provide any insight as to why we prefer our model to drift off of the screen? Drifting introduces more "negative" model pixels, pushing toward the well-supported y-axis area of our model. Possibly this is the result of poor calibration? Or wrong blurring sigma?

TODO: re-run the x/y offset experiment with smaller blurring sigma.

Data Sanity check

To do. Approach: instead of evaluating likelihood pixels against data, dump model/data pixel pairs into a list and (a) plot them or (b) train on them. Maybe just dump to a file and do it in matlab?
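A rough sketch of the dump step in Python, just to pin down the format; the real version would live in the C++ likelihood code. The function names and the two-column CSV layout are assumptions. The resulting file could then be loaded in matlab for plotting or training.

```python
import csv
import io

def pixel_pairs(model, data):
    """Yield (model_intensity, data_intensity) for every pixel of two equal-sized images."""
    if len(model) != len(data) or any(len(m) != len(d) for m, d in zip(model, data)):
        raise ValueError("model and data images must have the same shape")
    for m_row, d_row in zip(model, data):
        for m, d in zip(m_row, d_row):
            yield (m, d)

def dump_pixel_pairs(model, data, out):
    """Write the pairs as CSV rows to a writable text file object; returns the row count."""
    writer = csv.writer(out)
    writer.writerow(["model", "data"])
    count = 0
    for pair in pixel_pairs(model, data):
        writer.writerow(pair)
        count += 1
    return count

# Tiny 2x2 example images (blurred model and blurred data intensities).
model_img = [[0.0, 0.5], [1.0, 0.0]]
data_img = [[0.0, 0.25], [0.75, 0.5]]
buffer = io.StringIO()
rows_written = dump_pixel_pairs(model_img, data_img, buffer)
```

Passing a real file opened with `open(path, "w", newline="")` instead of the `StringIO` buffer writes the same rows to disk.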

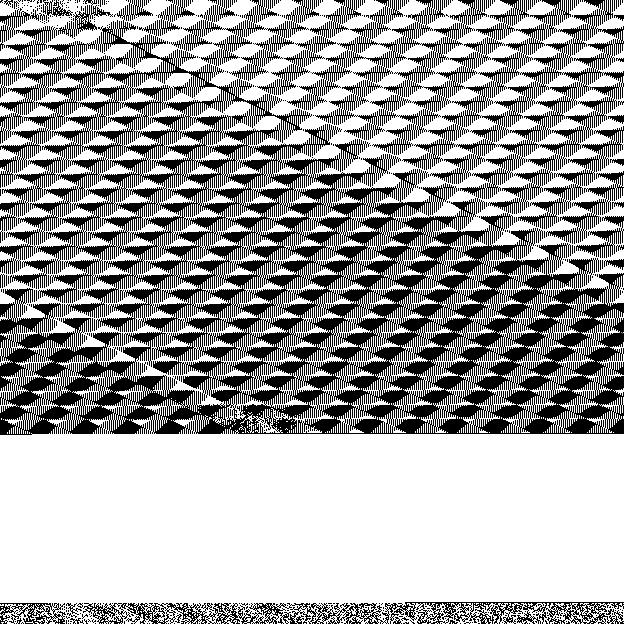

Blooper reel

While writing miscellaneous blocks of memory to disk as images, got some interesting but totally wrong results.

The following is partially due to rendering an array of doubles as floats. Interesting banding pattern. A mini-Mondrian!

The next is just uninitialized memory. Interesting patterns :-)

TODO (new)

- increase spacing between reconstructed points

- remove edges around image border.

- share blurrer between views

- get this running on cuda server

- why is it crashing on exit?

- Data sanity check - does it roughly match the GMM's distribution?

- train likelihood

- add "dump" mode to bd-likelihood which saves all image pairs to disk for analysis or debugging.

- test empty model likelihood