[Work Log] Experiment - Full-camera linearization

- Write function to make point covariances parallel

- re-run training - did values improve?

- reduced perturbation variance.

- noise variance change?

- re-run reconstruction - are pathologies present?

Okay done. Initial pass at linearization fails to affect training or reconstruction results. Basically a no-op. Are these results legit? Let's visualize the linearized model's direction vectors to see if we've resolved convergence issues. Is it possible the linearization is being overridden somewhere?

Visualization

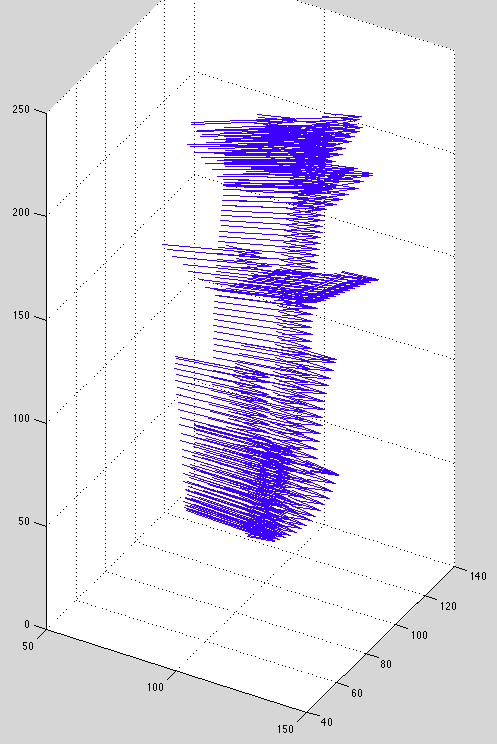

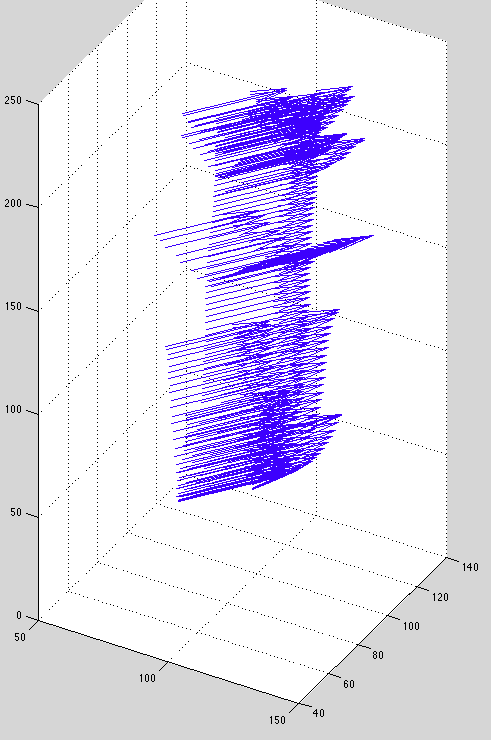

in_progress/visualize_bp_lines.m - quiver plot of backprojection lines, as defined by the smallest eigenvector of the precision matrix.
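For reference, a minimal sketch of pulling a backprojection direction out of a precision matrix. This is not the code in visualize_bp_lines.m; it is a stdlib-only Python illustration, and the function name `smallest_eigvec` and the toy matrix are assumptions. It uses shifted power iteration, assuming a symmetric, positive semi-definite, nonzero precision matrix:

```python
import math

def smallest_eigvec(A, iters=200):
    """Unit eigenvector for the smallest eigenvalue of a symmetric PSD matrix."""
    n = len(A)
    # For a PSD matrix the trace is an upper bound on the largest eigenvalue.
    shift = sum(A[i][i] for i in range(n))
    # B = shift*I - A shares A's eigenvectors, with the eigenvalue order reversed,
    # so plain power iteration on B converges to A's smallest eigenvector.
    B = [[(shift if i == j else 0.0) - A[i][j] for j in range(n)] for i in range(n)]
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iters):
        w = [sum(B[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Toy precision matrix (hypothetical): no constraint along d, precision 4 elsewhere.
d = [0.0, 0.6, 0.8]
A = [[4.0 * ((1.0 if i == j else 0.0) - d[i] * d[j]) for j in range(3)] for i in range(3)]
direction = smallest_eigvec(A)
print(direction)
```

The returned vector is only defined up to sign, and the iteration stalls if the starting vector happens to be orthogonal to the target direction.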

After plotting, it's clear the mean direction isn't right. Before:

After running "linearize_cameras.m":

Notice how the lines shift upward without explanation.

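Found bug: didn't sign-align direction vectors before taking mean. An eigenvector's sign is arbitrary, so vectors pointing in opposite directions partially cancel and skew the mean. Added dot-product check to flip them first.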
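A rough sketch of the sign-alignment idea, in Python rather than the MATLAB of linearize_cameras.m. The function name `mean_direction` and the choice of the first vector as the reference are assumptions for illustration, not necessarily what the fix does:

```python
import math

def mean_direction(dirs):
    """Average unit direction vectors whose signs are arbitrary.

    Each vector is flipped, if needed, so its dot product with the first
    vector (the reference) is non-negative before it is summed.
    """
    ref = dirs[0]
    total = [0.0] * len(ref)
    for d in dirs:
        dot = sum(a * b for a, b in zip(d, ref))
        sign = -1.0 if dot < 0 else 1.0
        total = [t + sign * a for t, a in zip(total, d)]
    norm = math.sqrt(sum(t * t for t in total))
    return [t / norm for t in total]

# Three nearly parallel lines; the second is stored with the opposite sign.
dirs = [[0.0, 0.0, 1.0], [0.0, 0.1, -0.995], [0.1, 0.0, 0.995]]
naive = [sum(c) / len(dirs) for c in zip(*dirs)]  # no alignment: components cancel
aligned = mean_direction(dirs)
print(naive)
print(aligned)
```

Without the flip, the z-components largely cancel and the mean tilts toward the small off-axis components, which is the kind of unexplained shift seen in the plot.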

BP lines now look good: parallel versions of originals, minimal shifting.

Test

Re-running reconstruction... no noticeable change.

Experiment

Nothing seems to change the reconstruction. I suspect a bug that is nullifying all our changes. Strategy: make a dramatic change and see if the reconstruction changes. If not, there's a bug somewhere.

Approach: Change bp-direction eigenvalue from 0 to be the same as the others.

Expected outcome: Drifting in reconstruction should be nearly eliminated.

Outcome: Expected change was observed - drift mostly eliminated.

Next steps: trace the construction of GP posterior covariance, end-to-end.
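The eigenvalue change in the approach above can be sketched as follows. This assumes the per-point precision has the form (I - d dᵀ) / noise_var, i.e. isotropic except for a zero eigenvalue along the unit backprojection direction d; that form, and all names here, are illustrative assumptions rather than the actual code:

```python
def outer(u, v):
    return [[a * b for b in v] for a in u]

def degenerate_precision(d, noise_var):
    """Precision (I - d d^T) / noise_var: eigenvalue 0 along unit vector d."""
    dd = outer(d, d)
    n = len(d)
    return [[((1.0 if i == j else 0.0) - dd[i][j]) / noise_var
             for j in range(n)] for i in range(n)]

def set_direction_eigenvalue(precision, d, eigval):
    """Add eigval * d d^T, raising the eigenvalue along d from 0 to eigval."""
    dd = outer(d, d)
    n = len(d)
    return [[precision[i][j] + eigval * dd[i][j] for j in range(n)]
            for i in range(n)]

def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

d = [0.0, 0.6, 0.8]   # hypothetical unit backprojection direction
noise_var = 0.25      # hypothetical noise variance
P = degenerate_precision(d, noise_var)
P_full = set_direction_eigenvalue(P, d, 1.0 / noise_var)
print(matvec(P, d))       # approximately the zero vector
print(matvec(P_full, d))  # approximately d / noise_var
```

With the eigenvalue filled in, every point is constrained along its backprojection line as tightly as across it, which is why this makes a good "dramatic change" for checking whether the precisions reach the reconstruction at all.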

Exploration

Rolling back changes from last experiment bit-by-bit until desired reconstruction vanishes.

...

Weird, now everything works as expected -- no drift using camera linearization.

Observations

Tried running linearization per-curve instead of per-camera. Per-curve shows more drifting than per-camera but less than per-point. Basically as expected.

Experiment

Run on datasets 7-11.

Outcome: Results seem legitimate.

Cleanup

We have tried several things to fix this drifting issue, all of which mostly failed until now. Now that we've found a cause of drifting and a fix, need to roll back each of the earlier changes one by one.

Re-add index optimization

(Disabled smooth index metaprior, because it is likely to have a bug.)

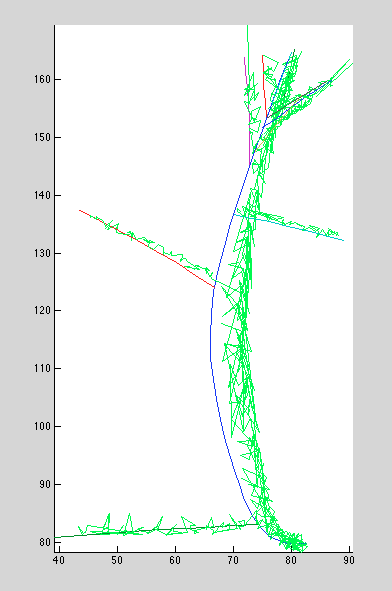

Seems to help some places, hurt others. Some "binding" (?), causing bulging away from data:

Looks like the red curve has a start index of -2, which is probably causing the bulge. This is an issue with the attachment inference code, not index estimation.

In other places, originally over-extended curves are properly trimmed after index optimization. Before:

After:

Training

Re-ran training using linearized cameras, with no notable change in results. This was unexpected; we expected far less perturbation variance, since the linearized cameras don't want to drift anymore.

Ran a manual test, plotting marginal likelihood vs. perturbation variance between the trained value (2.3023e-04) and our anticipated ideal value (~1e-6). Indeed, the optimal marginal likelihood is achieved at lower variances, suggesting the training routine has a bug.
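A sketch of this kind of manual sweep, on a log-spaced grid between the two values mentioned above. The `toy_log_ml` function and its residuals are stand-ins invented for illustration (a zero-mean Gaussian with variance noise_var + v); the real marginal likelihood comes from the model and is not reproduced here:

```python
import math

def log_spaced(lo, hi, n):
    """n values from lo to hi, evenly spaced in log space."""
    step = (math.log(hi) - math.log(lo)) / (n - 1)
    return [math.exp(math.log(lo) + i * step) for i in range(n)]

def sweep(log_ml, variances):
    """Evaluate log_ml on each variance; return the best variance and all scores."""
    scores = [(log_ml(v), v) for v in variances]
    return max(scores)[1], scores

# Hypothetical stand-in for the marginal likelihood, NOT the model's:
# residuals are treated as zero-mean Gaussian with variance noise_var + v.
residuals = [0.01, -0.0105, 0.0102, -0.0103]
noise_var = 1e-4

def toy_log_ml(v):
    s = noise_var + v
    return -0.5 * sum(math.log(2 * math.pi * s) + r * r / s for r in residuals)

grid = log_spaced(1e-6, 2.3023e-4, 25)
best, scores = sweep(toy_log_ml, grid)
print(best)
```

Plotting the scores against the variances on a log-x axis gives the same kind of curve as the manual test, and swapping `toy_log_ml` for the training routine's likelihood would show directly whether the two routines disagree.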

The training routine uses a different routine for computing the likelihood, which may be flawed (possibly due to the new model we're using). Work on it tomorrow.

BUG: training routine assumes precisions are based on a noise variance of 1.0. Fixed; no effect on perturb_smoothing_variance.

Training hypothesis - Index compression

Idea: possibly the indices are compressed, requiring deformation (stretching) to fit the data. Thus, training would want the deformation variance to be higher.

What is the best way to get unit-rate spacing of indices?

- oracle reconstruct, chord-length parameterize

- chicken-egg: reconstruct, chord-length parameterize, repeat

- chicken-egg w/ independent curves

- heuristic reconstruct w/ independent curves
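Whichever reconstruction option is used, the chord-length parameterization step itself is simple. A minimal sketch, assuming the reconstructed curve is available as an ordered list of 3D points (the function name `chord_length_indices` is illustrative):

```python
import math

def chord_length_indices(points):
    """Assign each point an index equal to the cumulative chord length so far.

    Consecutive indices then differ by the Euclidean distance between the
    points, so the curve advances at (approximately) unit rate per index.
    """
    idx = [0.0]
    for p, q in zip(points, points[1:]):
        idx.append(idx[-1] + math.dist(p, q))
    return idx

curve = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
print(chord_length_indices(curve))  # [0.0, 5.0, 17.0]
```

Chord length only approximates arc length, so the approximation gets better as the reconstructed points get denser along the curve.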

TODO

- Fix training routine. Goal: perturb_smoothing_variance ~= 1e-6

- compare model #5 vs. model #3 under the parallel camera model

Open issues

How best to compute marginal likelihood during model selection? (point-wise linearized or camera-wise?)

Posted by Kyle Simek